EPiRobots - A generic robots.txt handler for your EPiServer CMS 6 R2 site

Jul 14, 2011

EPiRobots is an EPiServer plug-in that handles the delivery and editing of the robots.txt file for your EPiServer CMS 6 R2 site(s). It does not depend on any page types, requires no .config modifications and should work in any EPiServer CMS 6 R2 deployment scenario.

Why did I create it?

People have written about handling robots.txt in EPiServer before, here and here. Each of those approaches fulfils a particular need, but all of them require some kind of modification to an EPiServer installation. I wanted a generic, plug-and-play solution for any EPiServer CMS 6 R2 site, whether that is a single site, a load-balanced setup, a multi-site installation or a full enterprise scenario using mirroring. In short, I wanted a reusable module that I could add to a site so that SEO guys, site admins and developers alike could be happy knowing that robots.txt was handled.

The requirements

So I set out to create EPiRobots, which in its simplest terms is a robots.txt plug-in for EPiServer CMS. My requirements were:

- Plug and play (i.e. no .config mods required)

- No dependency on page content and/or properties

- Allows site admins to edit the robots.txt from EPiServer admin mode

- Works in any deployment scenario (single server, load balanced, multi-site and mirrored)

- Plugs into any EPiServer installation (CMS 6 R2 upwards)

So what does it do?

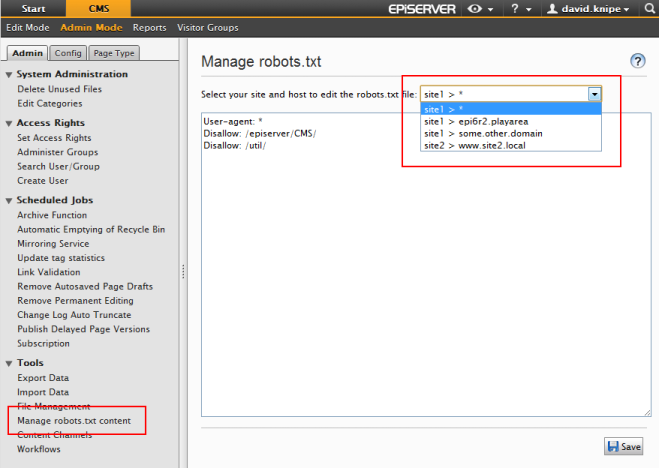

This solution adds a new option into admin mode called "Manage robots.txt content". This allows site administrators to edit the robots.txt content that's delivered for their site(s). The screen shot below shows the admin mode plug in that is used to edit robots.txt content. It shows robots.txt content can be edited for each siteId and all mapped host name(s) of that site. This allows site administrators a control over what robots.txt content is delivered for each configured site, but also for each mapped host name serving that site:

It also hooks into the URL rewriter module to render the robots.txt content when a request is made to www.yoursite.com/robots.txt. Hooking in this way also avoids the need to modify the web.config file when deploying.
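To illustrate the interception idea in isolation, here is a minimal Python sketch. It is not EPiRobots' actual code, which runs inside EPiServer's .NET URL rewriter; the function `handle_request`, the `lookup` callback and the (status, content type, body) response shape are all hypothetical. Requests for /robots.txt are answered directly with plain text, and every other request is passed through untouched.

```python
from typing import Callable, Optional, Tuple

# Hypothetical response shape: (status code, content type, body).
Response = Tuple[int, str, str]


def handle_request(
    path: str, host: str, lookup: Callable[[str], str]
) -> Optional[Response]:
    """Serve /robots.txt directly; return None so other URLs are processed as usual."""
    # Ignore any query string and compare case-insensitively (an illustrative choice).
    if path.split("?", 1)[0].lower() != "/robots.txt":
        return None
    # The content is looked up per requesting host name.
    return 200, "text/plain", lookup(host)


def demo_lookup(host: str) -> str:
    # Hypothetical stand-in for the real per-host content store.
    return "User-agent: *\nDisallow:\n"
```

For example, `handle_request("/robots.txt", "www.example.com", demo_lookup)` returns a 200 plain-text response, while `handle_request("/about/", "www.example.com", demo_lookup)` returns `None` and the request carries on through the normal pipeline.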

Features:

- By default, creates some "simple" robots.txt content that excludes EPiServer-specific URLs

- Works in multi-site scenarios

- Works with EPiServer mirroring

- Uses the Dynamic Data Store (DDS) to store robots.txt content

- Uses the EPiServer cache manager to cache content, so it works correctly when load balanced and performs well

- Allows different robots.txt content to be delivered depending on the mapped host name (or the default "*")
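The per-host lookup with a "*" default, combined with caching, can be sketched as follows. This is a hypothetical Python model, not EPiRobots' implementation: the class `RobotsContentStore` and its methods are invented for illustration, a plain dict stands in for the DDS, and a second in-process dict stands in for the cache. Content is keyed by (siteId, host name), and a host without its own entry falls back to the site's "*" entry.

```python
from typing import Dict, Optional, Tuple

DEFAULT_HOST = "*"


class RobotsContentStore:
    """Hypothetical per-site, per-host robots.txt store with a read-through cache."""

    def __init__(self) -> None:
        # Stand-in for persistent storage (EPiRobots uses the DDS).
        self._store: Dict[Tuple[str, str], str] = {}
        # Stand-in for a cache (EPiRobots uses the EPiServer cache manager).
        self._cache: Dict[Tuple[str, str], str] = {}

    def save(self, site_id: str, host: str, content: str) -> None:
        self._store[(site_id, host.lower())] = content
        # Drop every cached entry for this site, because hosts that fell back
        # to the "*" entry may now need different content.
        for key in [k for k in self._cache if k[0] == site_id]:
            del self._cache[key]

    def get(self, site_id: str, host: str) -> Optional[str]:
        key = (site_id, host.lower())
        if key in self._cache:
            return self._cache[key]
        # Prefer host-specific content, otherwise use the site's "*" entry.
        content = self._store.get(key)
        if content is None:
            content = self._store.get((site_id, DEFAULT_HOST))
        if content is not None:
            self._cache[key] = content
        return content
```

Usage: after `store.save("SiteA", "*", "User-agent: *\nDisallow:\n")` and `store.save("SiteA", "staging.example.com", "User-agent: *\nDisallow: /\n")`, a request for `www.example.com` gets the default content while `staging.example.com` is blocked entirely. Clearing a site's cached entries on save keeps fallback lookups from serving stale content; in a load-balanced deployment that invalidation also has to reach every server, which is why the post relies on the EPiServer cache manager rather than a purely local cache like this one.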

How do I get it?

The best place to get it is the EPiServer NuGet feed. Alternatively, you can download the NuGet package and/or the source from the EPiRobots project on CodePlex: http://epirobots.codeplex.com/.

Feedback

Will this be useful in your day-to-day work? Have I missed anything? I'd be happy to hear any feedback in the comments below or via @davidknipe.